Affiliate Disclosure: This post may include affiliate links. If you click and make a purchase, I may earn a small commission at no extra cost to you.

Before jumping directly to the difference between computer storage and memory, I want to give you a brief overview of what’s happening under the hood. My digital electronics teacher used to teach us the concept in the same way. So, let’s try it here.

Because its internal memory (registers and cache) is limited, the CPU almost always needs external memory to work. Modern computers use an external memory called RAM (Random Access Memory). As the name suggests, the CPU can read or write any location in it directly, in roughly the same amount of time, instead of stepping through the data in sequence. RAM is slower than the CPU's own cache but much larger.

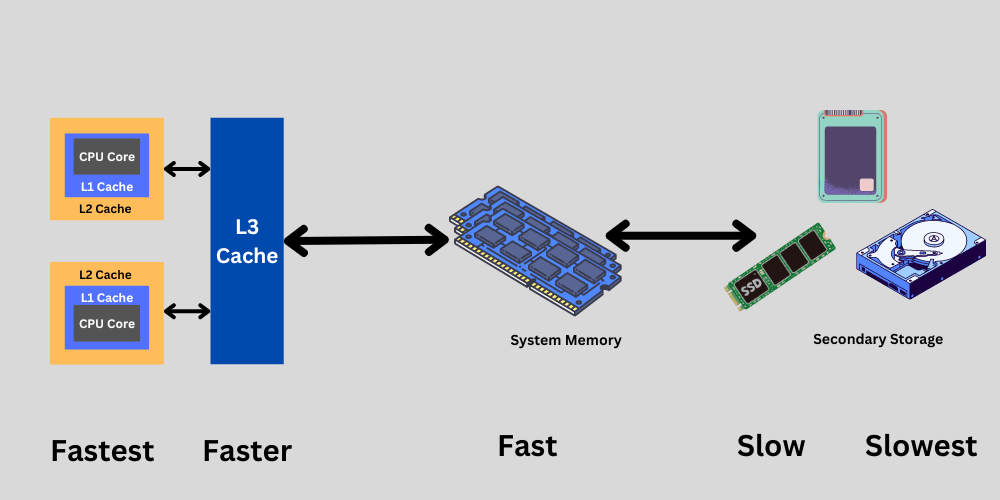

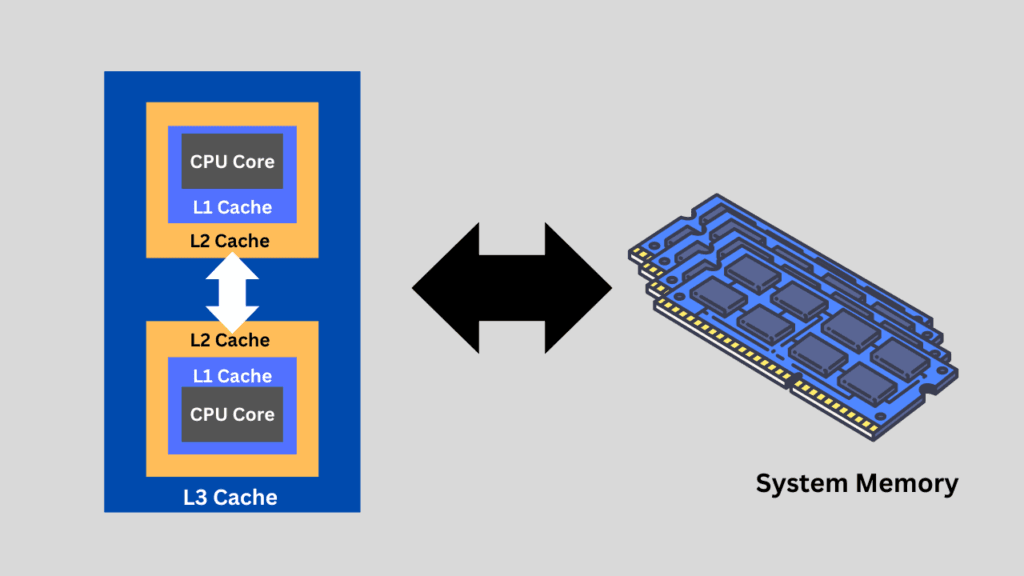

The images above give a rough overview of the CPU, memory, and storage architecture.

The CPU cache is not only limited but also quite expensive. An even smaller area of the CPU's memory is the registers, which sit inside the cores and are an integral part of the CPU.

Cache memory acts as an intermediary between the CPU cores and RAM. RAM (or DRAM) acts as an intermediary between the CPU and permanent storage (SSD or HDD). As a rule of thumb, data that no longer fits in the registers spills into the cache, data that no longer fits in the cache spills into RAM, and data that no longer fits in RAM spills onto the storage drive. However, it doesn't always happen in that order. The system might write some data to permanent storage or retrieve it from there even when RAM is free. Memory management is a separate topic, and we will not cover it here.

One thing is for sure: if data needs to be stored permanently, we use computer storage devices such as hard drives and SSDs. Raw data is also retrieved from these storage devices as required and first loaded into RAM for the CPU to process.
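To make that round trip concrete, here is a minimal Python sketch. The helper name `save_and_reload` is made up for this post, and the temporary file simply stands in for any file on your drive. The variable lives in RAM, the file lives on the storage device, and reading the file copies the data back into RAM.

```python
import os
import tempfile


def save_and_reload(text: str) -> str:
    """Write text to a file on the storage drive, then load it back into RAM."""
    # `text` currently lives in RAM and would vanish at power-off.
    fd, path = tempfile.mkstemp(suffix=".txt")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            f.write(text)         # hand the data to the storage device
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push it out of its RAM buffers
        with open(path, "r", encoding="utf-8") as f:
            return f.read()       # copy from storage back into RAM
    finally:
        os.remove(path)           # clean up; a real file would stay on the drive


if __name__ == "__main__":
    print(save_and_reload("photos, videos, documents"))
```

The sketch deletes the file at the end only to keep your drive tidy. Without that cleanup step, the file would survive a reboot while the variable would not.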

How does Computer memory work?

Since the cache memory is inside the CPU and we have no control over it, there is a limit to how much it can be scaled. Your computer also has ROM (Read-Only Memory), but that is a topic for another discussion. Today, we will focus on RAM.

Random Access Memory (RAM) is a type of temporary (volatile) memory designed for speed, not for permanent storage. It offers greater capacity than the CPU's cache and faster performance than SSDs or HDDs. The CPU can access any address in it directly without reading through the data stored before it, which is one reason it is so fast.

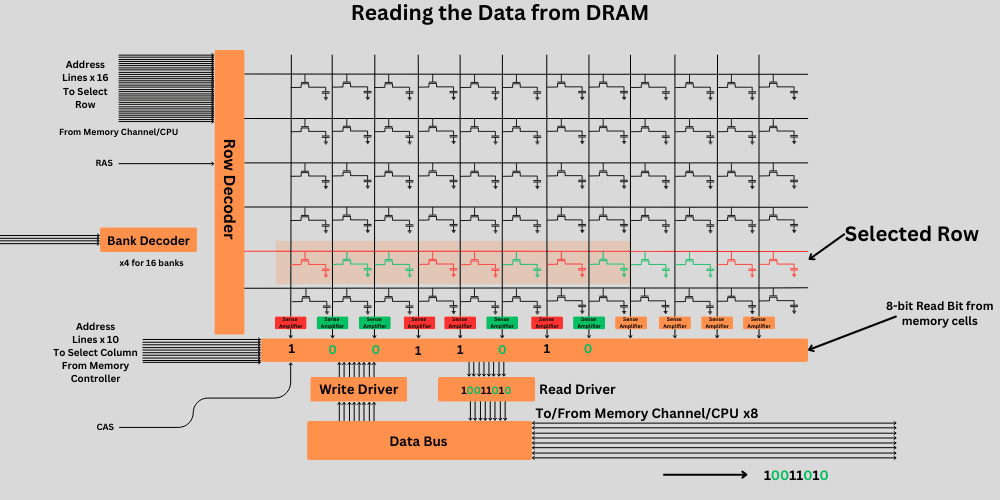

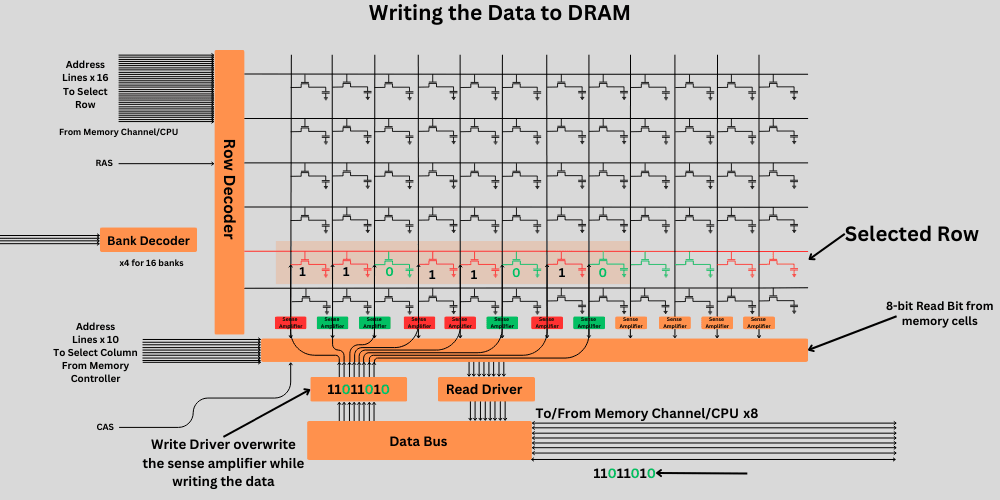

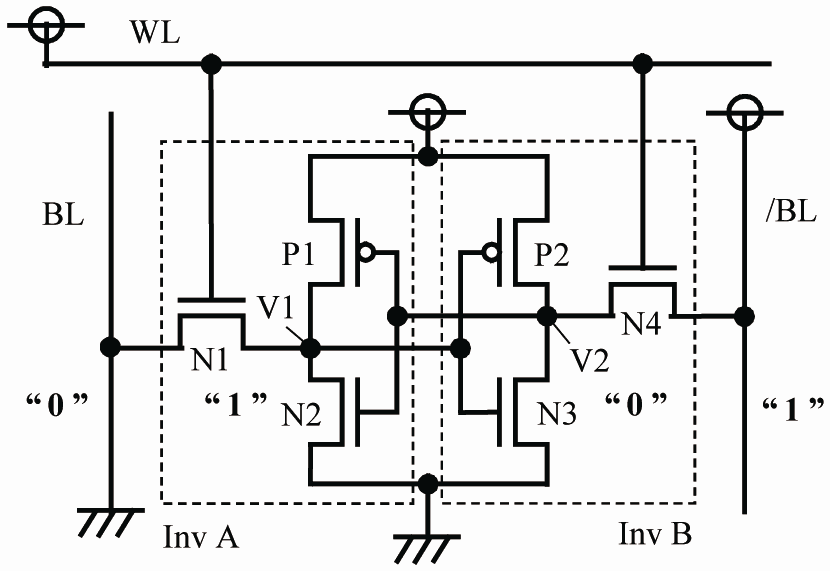

RAM (specifically DRAM) uses capacitive storage, which requires continuous refreshing to retain the stored information. Data is stored as electric charge, and capacitors are prone to leakage. Therefore, the state of these capacitors must be read frequently, and the appropriate high or low voltage must be reapplied to retain the charge and, consequently, the data.

The charged state of a capacitor is considered high (1), and the discharged state is considered low (0). When we combine thousands, millions, and even billions of these microscopic capacitors, we can create a random-access memory that is suitable for working alongside your CPU.
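The leak-and-refresh idea can be sketched as a toy Python model. Everything here is an assumption made for illustration: the class name `DramCell`, the leak rate, and the 0.5 read threshold are not real DRAM figures. The point is only to show why a stored 1 fades without refresh.

```python
from typing import Optional


class DramCell:
    """Toy model of one DRAM bit: a leaky capacitor plus a refresh step."""

    THRESHOLD = 0.5  # assumed: charge above this level reads as 1

    def __init__(self, bit: int, leak_per_tick: float = 0.1):
        self.charge = 1.0 if bit else 0.0
        self.leak_per_tick = leak_per_tick  # assumed leak rate, not a real figure

    def tick(self) -> None:
        # A little charge leaks away on every time step.
        self.charge = max(0.0, self.charge - self.leak_per_tick)

    def read(self) -> int:
        return 1 if self.charge > self.THRESHOLD else 0

    def refresh(self) -> None:
        # Read the bit and write it back at full strength.
        self.charge = 1.0 if self.read() else 0.0


def stored_bit_after(ticks: int, refresh_every: Optional[int]) -> int:
    """Store a 1, let time pass, and report what the cell reads afterwards."""
    cell = DramCell(1)
    for t in range(1, ticks + 1):
        cell.tick()
        if refresh_every and t % refresh_every == 0:
            cell.refresh()
    return cell.read()


if __name__ == "__main__":
    print(stored_bit_after(20, refresh_every=None))  # no refresh: the 1 decays to 0
    print(stored_bit_after(20, refresh_every=3))     # regular refresh: the 1 survives
```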

Imagine your CPU has a large calculation in hand, involving thousands of steps with millions of bits and carries to re-collect and re-calculate. Perhaps the cache memory isn’t enough to store all the required data.

Now, the CPU will use RAM to store those larger data sets and reuse them for calculations. Besides RAM being a slower type of memory than cache, there is a second reason why this slows down processing: the distance between the data and the CPU has increased. Still, RAM is significantly faster and more responsive than even the fastest SSDs available.

How does computer storage work?

Permanent storage in a computer is used whenever necessary. Unlike RAM, it does not take part in real-time processing. When we open a program, the necessary files are loaded from our storage device into RAM. The system may later request more files or write changes back if needed. So, it is like an accountant keeping track of the changes and providing the necessary data to your system.

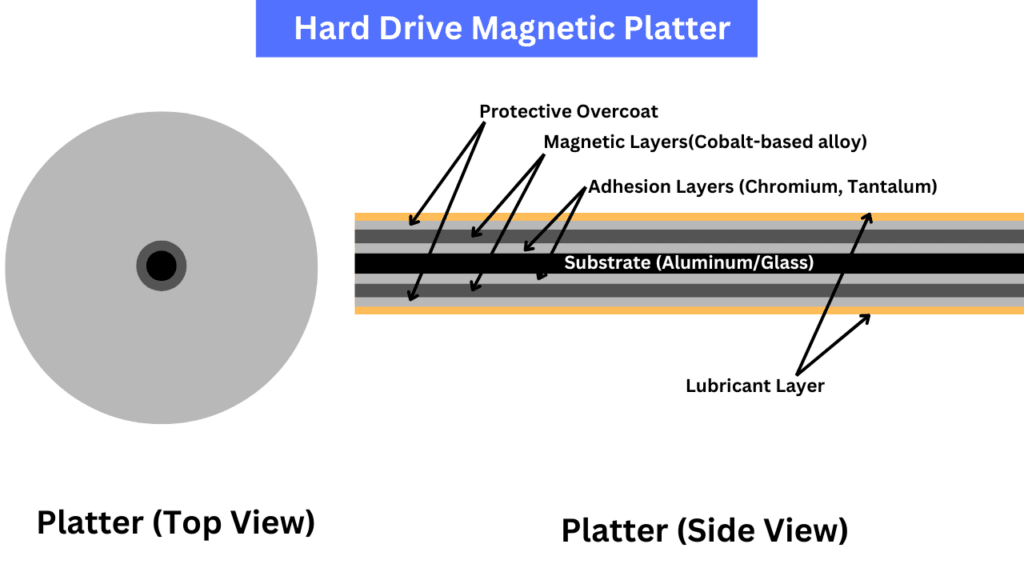

If the storage drive is a hard drive, the data is sent to the HDD controller, which writes it bit by bit by flipping the magnetic orientation (N or S) of tiny regions on a magnetic platter. For retrieval, the controller senses these states using the read/write head and returns the data.

In the case of SSDs, the SSD controller takes the incoming data and programs (writes) it into the NAND flash cells, while also recording where each piece of data is located. For retrieval, it looks up that location, reads the data from it, and sends it back to the CPU.
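The "recording the location" part of an SSD controller's job can be sketched as a lookup table. This is a heavily simplified Python illustration with invented names (`ToyFlashController`, `mapping`). Real controllers work on pages and blocks, and they also handle garbage collection and wear leveling, none of which is modeled here.

```python
class ToyFlashController:
    """Toy SSD controller: maps logical addresses to physical flash pages."""

    def __init__(self, num_pages: int):
        self.pages = [None] * num_pages  # physical NAND pages
        self.mapping = {}                # logical address -> physical page index
        self.next_free = 0

    def write(self, logical_addr: int, data: bytes) -> None:
        # Flash is not overwritten in place here, so every write takes a
        # fresh page and the map is updated to point at it.
        if self.next_free >= len(self.pages):
            raise RuntimeError("no free pages (garbage collection is not modeled)")
        self.pages[self.next_free] = data
        self.mapping[logical_addr] = self.next_free
        self.next_free += 1

    def read(self, logical_addr: int) -> bytes:
        # Look up where the data lives, then fetch it from that page.
        return self.pages[self.mapping[logical_addr]]


if __name__ == "__main__":
    ssd = ToyFlashController(num_pages=4)
    ssd.write(7, b"first version")
    ssd.write(7, b"second version")  # lands on a new page; the old one is now stale
    print(ssd.read(7), "stored on physical page", ssd.mapping[7])
```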

Now, the distance between the origin and the target storage has increased even more. So, latency increases. Additionally, permanent storage devices are significantly slower than RAM. However, we gain additional space to hold data for the long term, both internally and externally. So, we trade speed for this benefit. That said, modern SSDs are fast enough that storage is rarely the bottleneck in everyday use.

Difference between Computer Memory and Storage

There are several key differences between computer memory and storage that we should address in our article.

| Aspect | Computer Memory (RAM) | Storage (Hard Drive, SSD) |
|---|---|---|
| Definition | Temporary data storage used by the CPU | Permanent data storage for long-term use |
| Type of Data | Volatile: Data is lost when power is turned off | Non-volatile: Data is retained even when power is off |
| Access Speed | Very fast, latency measured in nanoseconds | Slower than RAM, latency measured in microseconds (SSD) to milliseconds (HDD) |
| Cost | More expensive per GB | Less expensive per GB |
| Capacity | Typically smaller (GBs, up to a few TBs in servers) | Typically larger (from GBs to multiple TBs) |
| Function | Holds data and instructions that the CPU actively uses | Stores the operating system, applications, and user data |
| Primary Components | Made up of integrated circuits (ICs) | Consists of spinning disks or solid-state memory chips |
| Usage | Used for running programs, processes, and tasks | Used for storing files, documents, and applications |
| Physical Location | Plugged into or soldered onto the motherboard | Housed inside the computer or connected externally |
| Persistence of Data | Data is lost when power is turned off | Data is retained even when power is turned off |
| Performance Impact | Can significantly impact system performance | Mainly affects boot, loading, and file transfer times |

Purpose of Computer Memory

As the scale of computing increases, the data computers have to work with grows larger. Just keeping 2 to 3 text-heavy Google Chrome tabs open can take around 300 MB of RAM. The more processing your system has to do, the more RAM it requires.

We can't have that much cache inside our CPU, as it would make it too expensive and bulky. So, we add a temporary memory that holds the information the CPU is currently working on.

You can think of computer memory as a slower extension of the cache. It is a larger pool, albeit at the expense of speed.

It is designed not to retain data permanently, but to provide the CPU with the resources needed to support all the software that must run for our computers to function correctly.

Purpose of Computer Storage

Once the problem of storing large chunks of temporary data is solved, we need a secure location to store permanent data that remains intact when the system shuts down. Otherwise, we won’t be able to store our photos or videos on our computers.

For that, we use computer storage. Again, the data is stored as bits, but the space has increased. Now, we are talking in thousands of gigabytes. In 2024, the prominent permanent computer storage devices are hard drives and solid-state drives.

The primary purpose is to hold the OS and software data. These storage devices can release that data whenever required and load it onto the RAM. These drives also facilitate organization, backup, recovery, and data sharing.

They are also helpful for capacity expansion and storing big multimedia files.

Speed of Computer Memory

Generally, writing to and reading from computer memory (RAM) comprises the following steps:

- Memory Addressing

- Address Decoding

- Row Activation

- Column Access

- Data Transfer

- Write Confirmation (In case of Writing)
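The addressing and decoding steps above can be sketched in a few lines of Python. This is a simplification under stated assumptions: the function name `decode_address` and the 10 column bits are invented for the example, and real memory controllers also split the address into channel, rank, and bank bits.

```python
from typing import Tuple


def decode_address(addr: int, column_bits: int = 10) -> Tuple[int, int]:
    """Split a flat cell address into (row, column).

    Assumption: the low `column_bits` bits select the column and the
    remaining high bits select the row.
    """
    column = addr & ((1 << column_bits) - 1)  # keep only the low bits
    row = addr >> column_bits                 # drop the low bits
    return row, column


if __name__ == "__main__":
    row, column = decode_address(0b1011_0000000011)
    # The row is activated first, then the column is read out of that open row.
    print(f"activate row {row}, then access column {column}")
```

Because a whole row is activated at once, a second access to the same row can skip the row activation step, which is one reason neighbouring data is cheaper to read.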

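Now, if we examine DDR4 RAM, the column access step alone (the CAS latency) takes approximately 10 to 20 memory clock cycles. A complete access, as seen by the CPU, costs on the order of tens of nanoseconds, which works out to hundreds of CPU clock cycles.

To compare, the L1 cache generally responds within a handful of CPU clock cycles. The L2 cache typically takes 5 to 15 clock cycles.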

With each new DDR (Double Data Rate) generation, the speed increases. However, due to fundamental design barriers, RAM cannot reach the speed of the cache.

Memory speed is commonly quoted in megahertz (MHz), although the figure is really a transfer rate in megatransfers per second (MT/s). For example, a DDR4 module sold as "3200 MHz" performs 3200 million transfers per second on a 1600 MHz clock, because DDR memory transfers data twice per clock cycle.
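To turn a speed rating into actual time, here is a small Python sketch. It assumes the number printed on a DDR module (for example 3200) is its transfer rate in MT/s, so the real clock is half of that. The function name `cas_latency_ns` and the CL16 timing are illustrative choices, not figures from a specific product.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Convert a CAS latency in memory clock cycles to nanoseconds."""
    clock_mhz = data_rate_mts / 2    # DDR: two transfers per clock cycle
    cycle_time_ns = 1000 / clock_mhz  # duration of one clock cycle
    return cas_cycles * cycle_time_ns


if __name__ == "__main__":
    # Assumed example: a DDR4-3200 kit with a CL16 timing.
    print(cas_latency_ns(3200, 16), "ns")
```

This covers only the column access step. Row activation and the trip through the memory controller add to the total time the CPU waits.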

Speed of Computer Storage

The process of writing data to a computer storage device varies depending on the device type. As we discussed above, HDDs store data magnetically on spinning platters using mechanical parts. SSDs, on the other hand, use transistor-based memory, which is much faster.

Due to the mechanical components, the maximum sequential read/write speed of a typical hard drive is approximately 250 MB/s. This speed depends on how fast the magnetic platter rotates (RPM) and on how densely data is packed on it. However, hard drives are available at a lower price per GB.
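The effect of RPM on a hard drive is easy to estimate. The Python sketch below computes the average rotational latency, meaning the time the drive waits, on average, for the right spot on the platter to rotate under the head (half a revolution). The function name is made up for this post, and seek time is deliberately left out.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average wait for the target sector to rotate under the head, in ms."""
    seconds_per_revolution = 60 / rpm
    return seconds_per_revolution / 2 * 1000  # on average, half a turn


if __name__ == "__main__":
    for rpm in (5400, 7200):
        print(f"{rpm} RPM: about {avg_rotational_latency_ms(rpm):.2f} ms")
```

A few milliseconds per access is enormous next to RAM's nanoseconds, which is why hard drives struggle most with small random reads.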

SSD technology, on the other hand, is improving rapidly. PCIe Gen 5 drives are here, and SSDs like the Samsung 9100 Pro and WD Black SN8100 reach speeds beyond 10 GB/s in sequential reads and writes. Random read/write numbers will always be significantly lower than sequential ones, but that is a discussion for another time.

Speed Comparison Between Computer Memory and Storage

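Let's take the example of DDR4 RAM sold as 3200 MHz (DDR4-3200). Its actual clock runs at 1600 MHz, and data is transferred twice per clock cycle, so the data rate will be:

1600 MHz * 2 = 3200 MT/s

Now, each RAM channel has a 64-bit bus width, which means it can move 8 bytes per transfer.

The peak data transfer rate of this RAM will be 3200 MT/s * 8 bytes = 25,600 MB/s per channel, or 51,200 MB/s in a typical dual-channel setup.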

These are theoretical peak numbers, but even compared with the fastest consumer SSDs to date, at roughly 14,000 MB/s, RAM bandwidth is considerably higher.
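If you want to run the same arithmetic for other modules, here is a small Python sketch. It assumes a DDR4-3200 module performs 3200 MT/s per channel over a 64-bit bus. The function name `peak_bandwidth_mb_s` is invented for this post, and the result is a theoretical peak, not a measured speed.

```python
def peak_bandwidth_mb_s(data_rate_mts: int, bus_width_bits: int = 64,
                        channels: int = 1) -> int:
    """Theoretical peak RAM bandwidth in MB/s."""
    bytes_per_transfer = bus_width_bits // 8
    return data_rate_mts * bytes_per_transfer * channels


if __name__ == "__main__":
    single = peak_bandwidth_mb_s(3200)            # one channel
    dual = peak_bandwidth_mb_s(3200, channels=2)  # typical desktop setup
    fastest_ssd = 14_000                          # approximate figure from the text, in MB/s
    print(single, dual, round(dual / fastest_ssd, 1))
```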


Types of Computer Memory

1. Random Access Memory (RAM):

RAM is divided into several categories based on its usage and characteristics, including:

DRAM (Dynamic RAM): A common type of RAM used in most computers. Requires periodic refreshing to maintain data.

SRAM (Static RAM): Faster and more expensive than DRAM, used in cache memory and registers within the CPU.

DDR SDRAM (Double Data Rate Synchronous Dynamic RAM): An advanced type of SDRAM that transfers data twice per clock cycle, offering higher data transfer rates.

SDRAM (Synchronous Dynamic RAM): Synchronized with the system's memory bus clock, allowing for faster data access.

2. Read-Only Memory (ROM):

ROM is non-volatile memory used to store firmware and permanent system instructions that the user does not normally change.

Data such as boot-up instructions, basic system functions, BIOS, and other essential information is stored in this memory.

ROM is typically used to store critical system settings and firmware that control hardware components.

3. Cache Memory:

As we discussed earlier, cache memory is a small, high-speed memory located directly on or near the CPU.

It stores frequently accessed data and instructions to speed up CPU operations by reducing memory access latency.

Cache memory is divided into several levels (L1, L2, L3) based on proximity to the CPU and size.
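The idea of keeping recently used data close to the CPU can be sketched as a tiny cache simulation in Python. The least-recently-used (LRU) eviction policy is an assumption chosen for simplicity; real CPU caches use hardware approximations of it and work on fixed-size cache lines. The class name `ToyCache` is made up for this post.

```python
from collections import OrderedDict


class ToyCache:
    """Toy cache in front of RAM, evicting the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> value, oldest entry first
        self.hits = 0
        self.misses = 0

    def load(self, address: int, ram: dict) -> int:
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
            return self.lines[address]
        self.misses += 1
        value = ram[address]                 # slow path: fetch from RAM
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used
        return value


if __name__ == "__main__":
    ram = {addr: addr * 10 for addr in range(8)}
    cache = ToyCache(capacity=2)
    for addr in (0, 1, 0, 2, 1):
        cache.load(addr, ram)
    print("hits:", cache.hits, "misses:", cache.misses)
```

In this run only the second access to address 0 is a hit. Address 1 gets evicted before it is needed again, which shows how a cache that is too small for the working set keeps falling back to RAM.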

4. Virtual Memory:

Virtual memory is a memory management technique used by operating systems to extend the available memory beyond physical RAM.

It uses a portion of the computer’s storage devices (e.g., hard drive or SSD) as temporary storage to hold data and swap it in and out of RAM as needed.
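Swapping can be illustrated with a toy pager in Python. All names (`ToyPager`, `ram`, `swap`) and the first-in, first-out eviction rule are assumptions for the sake of the example. Real operating systems use page tables, hardware support, and smarter replacement policies.

```python
class ToyPager:
    """Toy virtual memory: a few RAM frames backed by a swap area on storage."""

    def __init__(self, ram_frames: int):
        self.ram_frames = ram_frames
        self.ram = {}    # page number -> contents currently in RAM
        self.swap = {}   # page number -> contents pushed out to storage
        self.order = []  # pages in the order they were loaded (FIFO)

    def access(self, page: int) -> str:
        if page in self.ram:
            return self.ram[page]            # fast path: already in RAM
        # Page fault: bring the page in from swap, or create it on first use.
        contents = self.swap.pop(page, f"page-{page}")
        if len(self.ram) >= self.ram_frames:
            victim = self.order.pop(0)       # oldest page leaves RAM
            self.swap[victim] = self.ram.pop(victim)
        self.ram[page] = contents
        self.order.append(page)
        return contents


if __name__ == "__main__":
    pager = ToyPager(ram_frames=2)
    for page in (1, 2, 3):
        pager.access(page)
    print("in RAM:", sorted(pager.ram), "in swap:", sorted(pager.swap))
```

Every trip to the swap area goes through the storage drive, so a system that swaps heavily feels much slower than one with enough physical RAM.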

5. Registers:

Registers are the smallest and fastest type of memory used by the CPU to store data temporarily during processing.

They are built into the CPU and are used to hold data, instructions, and memory addresses during program execution.

Types of Computer Storage

Primary Storage:

Please don't get confused when I say RAM is the primary storage of your computer. It is called that because it is the first storage the CPU interacts with directly, even though it is temporary.

Secondary Storage:

Hard Disk Drives (HDDs): Hard Drives are mechanical devices that utilize magnetic material to store data in the form of changing magnetic orientations.

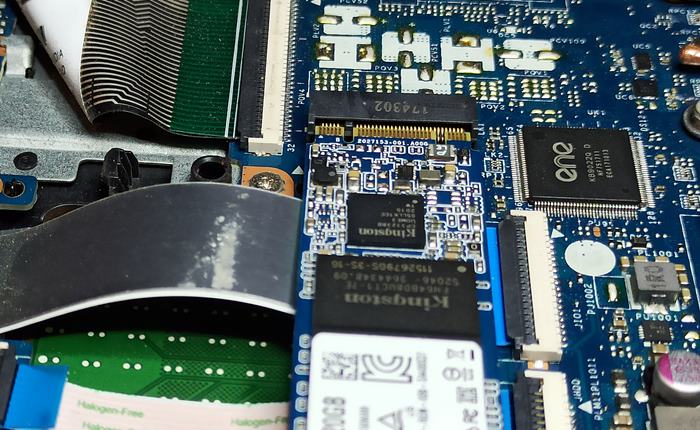

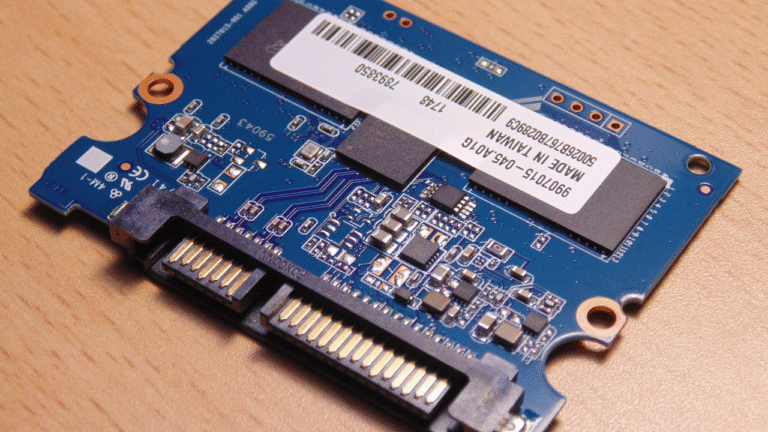

Solid-State Drives (SSDs): SSDs are modern storage devices that use either the SATA or PCIe interface for data transmission. Modern NVMe SSDs, which use PCIe, offer higher bandwidth, lower latency, and better scalability. With the help of NAND flash, they achieve very high data transfer rates.

Hybrid Drives (SSHDs): Combination storage devices that integrate both HDD and SSD technologies. SSHDs utilize an SSD cache to store frequently accessed data, thereby improving performance without compromising storage capacity.

External Storage Drives: Portable storage devices that connect to computers via USB, Thunderbolt, or other interfaces. These include external HDDs, SSDs, pen drives, and hybrid drives used for backup, file storage, and data transfer.

Network Attached Storage (NAS): These are specialized storage systems connected to a network, allowing multiple users to access and share files and data. NAS devices often contain multiple hard drives configured in RAID for data redundancy and enhanced performance.
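One way RAID provides redundancy is parity. The Python sketch below shows the XOR trick behind parity-based levels such as RAID 5 in its simplest form, with two data blocks and one parity block of equal length. The function name `xor_blocks` and the sample data are invented for the example, and real RAID implementations stripe data across drives in ways not shown here.

```python
def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


if __name__ == "__main__":
    drive_a = b"MEMO"  # data block on the first drive
    drive_b = b"DISK"  # data block on the second drive
    parity = xor_blocks(drive_a, drive_b)  # stored on a third drive

    # If the first drive fails, its block can be rebuilt from the other two.
    rebuilt = xor_blocks(parity, drive_b)
    print(rebuilt == drive_a)
```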

Conclusion

These are the fundamental differences between computer memory and storage. Internally, computers are highly complex and require a solid understanding of both electronics and software. Hardly anybody knows completely how a computer works. However, with the help of abstraction and articles like this, we gain a fair understanding of how these things work. If you enjoyed this article, please leave a comment.