Affiliate Disclosure: This post may include affiliate links. If you click and make a purchase, I may earn a small commission at no extra cost to you.

Suppose you have been working on an important document for hours when the power suddenly goes out. When you restart the system, you discover that the file has been corrupted or, worse, that the entire system has become unstable. File system journaling is designed to prevent such scenarios.

Every storage system rests on a few core principles, and data integrity is one of them. The file system is responsible for it: it keeps track of every piece of data on the disk and ensures that the data stays consistent even when something goes wrong during a read or write operation. No disk is perfect, and even on a perfect disk, interruptions such as forced shutdowns, crashes, or power failures can occur at any time.

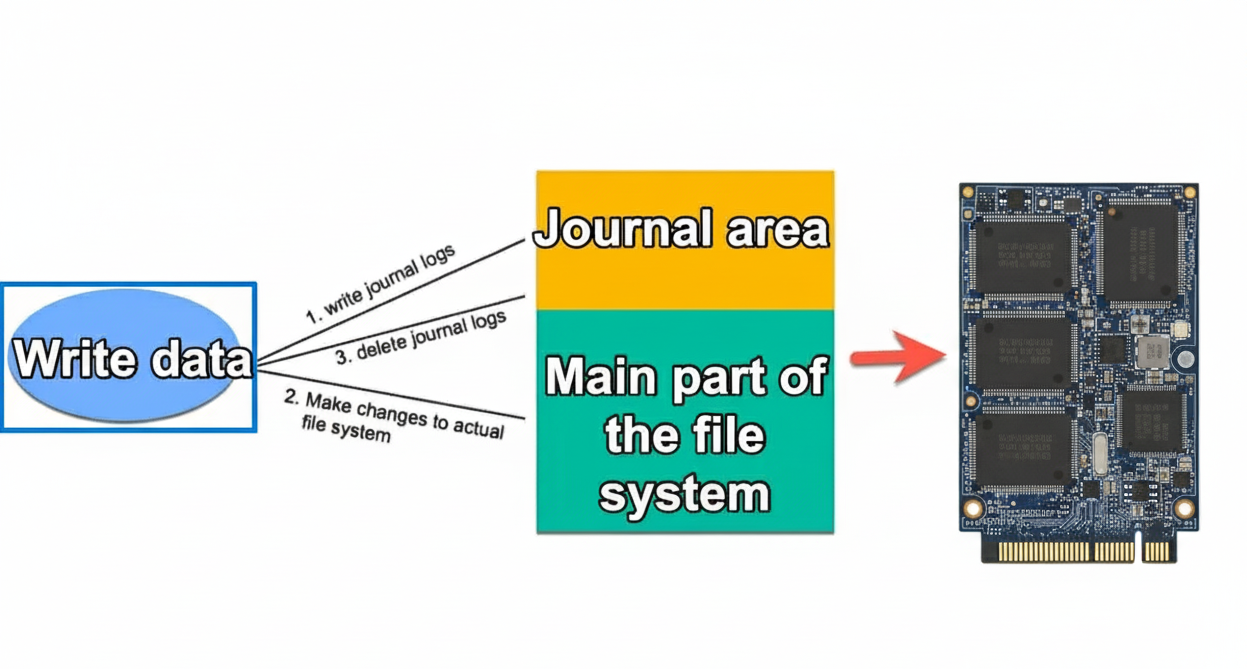

A journal is a dedicated portion of the disk where changes are recorded in a log before they are permanently applied. You can think of it as a diary for your file system: it notes each pending change so that, after a failure, the system can replay or undo that change to restore consistency.

Popular File Systems Using Journaling

- NTFS

- ext3/ext4

- APFS

- XFS/JFS

Journaling vs. Non-Journaling File Systems

Based on how they keep records of their data, file systems fall into two categories: journaling and non-journaling. Let’s understand them first.

1. Non-Journaling File System

Non-journaling file systems do not keep a log of changes. Whenever a file is modified, the changes are written directly to disk. This gives faster writes and lower disk overhead. The on-disk structure is also simpler, which suits flash drives, memory cards, and embedded systems. However, these file systems have a serious drawback: a higher risk of corruption.

If a crash happens mid-write, the file system can become corrupted or require a lengthy repair (e.g., chkdsk in Windows or fsck in Linux). These drawbacks make these file systems unsuitable for large-scale and mission-critical systems.

Examples: FAT32, exFAT, ext2.

2. Journaling File System

As the name suggests, these file systems record pending changes in a journal (log) before applying them to the main file system. This prevents corruption and reduces recovery time. If a crash happens, you don’t have to scan the whole disk. The system just replays or discards the journal entries to restore the file system’s consistency.

Examples: NTFS (Windows), ext3/ext4 (Linux), APFS (macOS), XFS, JFS.

How Does Journaling Work?

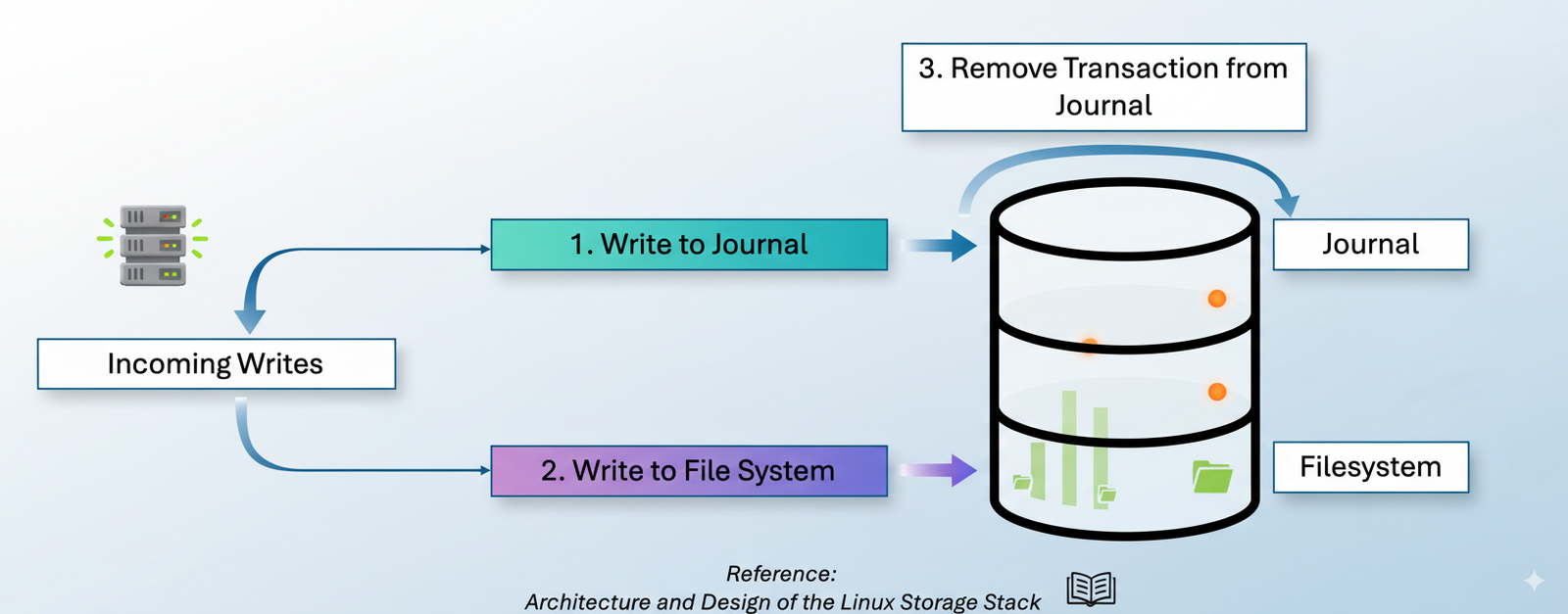

As discussed above, the file system records every change in a special log called the journal before it actually makes that change. It’s important to understand what happens behind the scenes.

- A program asks the file system to create, modify, or delete a file. For instance, it saves a 10 MB video file to your Documents folder.

- The file system creates an entry in the journal that contains:

  - File path

  - New data blocks to be used

  - Metadata changes

- The journal entry is marked as in progress, so that after a crash the operating system can tell which operation was not completed.

- Then, the actual data is written to the blocks.

- Once the write is complete, the journal marks the operation as done and frees the journal space for the next operation.
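The steps above can be sketched as a toy, in-memory model. This is only an illustration under simplifying assumptions: `ToyFS`, `JournalEntry`, and `write_file` are hypothetical names, plain dictionaries stand in for the disk, and no real file system exposes this interface.

```python
from dataclasses import dataclass, field


@dataclass
class JournalEntry:
    txid: int
    path: str                 # file path affected by the operation
    blocks: dict              # block number -> new contents
    metadata: dict            # metadata changes, e.g. {"size": 6}
    committed: bool = False   # False means "in progress"


@dataclass
class ToyFS:
    journal: list = field(default_factory=list)
    disk: dict = field(default_factory=dict)      # block number -> bytes
    inodes: dict = field(default_factory=dict)    # path -> metadata
    next_txid: int = 1

    def write_file(self, path, blocks, metadata):
        # Log the intent first and leave the entry marked as in progress.
        entry = JournalEntry(self.next_txid, path, dict(blocks), dict(metadata))
        self.next_txid += 1
        self.journal.append(entry)

        # Write the actual data and metadata to their final location.
        for number, data in blocks.items():
            self.disk[number] = data
        self.inodes[path] = dict(metadata)

        # Mark the operation as done and free its journal space.
        entry.committed = True
        self.journal.remove(entry)
        return entry.txid


fs = ToyFS()
fs.write_file("/docs/video.mp4", {7: b"abc", 8: b"def"}, {"size": 6})
print(fs.journal)  # empty again once the operation has completed
```

If the process stopped between the journal append and the final two lines of `write_file`, the entry would still be sitting in `journal` with `committed` set to `False`, which is exactly the marker that recovery looks for.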

Crash Scenario

If the system crashes, the file system checks the journal during recovery. If it finds incomplete entries, it either replays them to finish the operation or rolls them back to discard the partial changes, so the file system stays consistent.
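Here is a minimal sketch of that recovery pass. It assumes a redo-style journal in which an entry is replayed only if it was fully logged (`committed` is true) and discarded otherwise. The `recover` function and the dictionary layout are hypothetical, chosen only for illustration.

```python
def recover(journal, disk):
    """Return (recovered_disk, replayed_txids, discarded_txids)."""
    recovered = dict(disk)
    replayed, discarded = [], []
    for entry in journal:
        if entry["committed"]:
            # Fully logged: applying it again is safe, because writing
            # the same block contents twice gives the same result.
            recovered.update(entry["blocks"])
            replayed.append(entry["txid"])
        else:
            # The journal record itself is incomplete: ignore it.
            discarded.append(entry["txid"])
    return recovered, replayed, discarded


disk = {1: b"old", 2: b"old"}
journal = [
    {"txid": 1, "committed": True, "blocks": {1: b"new"}},
    {"txid": 2, "committed": False, "blocks": {2: b"half"}},
]
recovered, replayed, discarded = recover(journal, disk)
print(recovered)  # {1: b'new', 2: b'old'}
```

Only the journal is examined, not the whole disk, which is why recovery time depends on the journal size rather than the volume size.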

Why Journaling?

A crash or power loss can interrupt a sequence of writes and leave the on-disk data structures in an intermediate state.

Deleting a file, for example, is not a single operation; it is made up of multiple steps.

1. Remove the entry in the directory.

2. Free the inode.

3. Free the data blocks.

If a crash happens midway:

After step 1, before step 2: The inode still exists, but no directory entry points to it, so it becomes an orphan.

After step 2, before step 3: The inode is gone, but its data blocks are still marked as in use, so the space is leaked and cannot be reused.

If step 3 happens before step 1: The blocks are freed while the file still references them, so they can be reallocated to new files. Two files then share the same blocks, which causes corruption.

If step 2 happens before step 1: The directory entry becomes a dangling reference; the file appears to exist, but it has no valid inode.
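The four cases above can be reproduced with a small consistency check over a toy model. This is a sketch, not how fsck is implemented: `check` is a hypothetical helper, `directory` maps names to inode numbers, `inodes` maps inode numbers to their block lists, and `allocated` is the set of blocks marked as in use.

```python
def check(directory, inodes, allocated):
    """Report the inconsistencies a crash in mid-delete can leave behind."""
    referenced = set(directory.values())
    owned = {block for blocks in inodes.values() for block in blocks}
    return {
        # Inodes that no directory entry points to.
        "orphan_inodes": sorted(set(inodes) - referenced),
        # Directory entries whose inode no longer exists.
        "dangling_entries": sorted(
            name for name, ino in directory.items() if ino not in inodes
        ),
        # Blocks marked as in use that belong to no inode.
        "leaked_blocks": sorted(allocated - owned),
        # Blocks an inode still uses although they are marked as free.
        "freed_but_referenced": sorted(owned - allocated),
    }


# Healthy state: a.txt -> inode 5 -> blocks 10 and 11.
healthy = check({"a.txt": 5}, {5: [10, 11]}, {10, 11})

after_step_1 = check({}, {5: [10, 11]}, {10, 11})       # orphan inode
after_step_2 = check({}, {}, {10, 11})                  # leaked blocks
step_3_first = check({"a.txt": 5}, {5: [10, 11]}, set())  # freed too early
step_2_first = check({"a.txt": 5}, {}, {10, 11})        # dangling entry

print(after_step_1["orphan_inodes"])  # [5]
```

A full scan like this has to visit every directory entry, inode, and block, which is the cost that journaling avoids.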

To repair these inconsistencies, tools like fsck were traditionally run after a crash to scan the entire file system and fix any issues. The disadvantage was that this process is slow, especially on large or slow disks. Journaling file systems were introduced to solve this problem. Their core principle is write-ahead logging:

“Don’t make a change unless you’ve logged your intent to do so.”

Types of Journaling

Journaling works differently across file systems, and each one balances speed, safety, and storage efficiency in its own way. The type of journaling determines what exactly is recorded in the journal and how much extra space and time the process takes. The main types are given below.

1. Metadata-only Journaling

It records changes to the file system structure, such as file names, permissions, and timestamps. It does not record the actual file contents.

| Pros | Cons |
|---|---|
| It is faster because less is written to the journal. | The file system structure remains intact, but files that were being written at the time of the crash may be corrupted or contain old data. |
| The journal is small, so there is less overhead. | User data is less protected; only the structure is safe. |

Example of Metadata Journaling

ext3 in Linux (its default configuration journals only metadata), XFS, and NTFS.

2. Full Data + Metadata Journaling

In this type of journaling, both the metadata and the actual file data are recorded in the journal. First, the file data is written to the journal, then the metadata, and only after that are both written to their final location on the disk.

| Pros | Cons |
|---|---|
| Safety is at its maximum, and files are not left corrupted after recovery. | Writes are slower since everything is written twice. |
| Guarantees consistency of file contents (no “garbage” data). | More journal space is required. |
| Best for critical systems (databases, financial apps, logs). | |

Example of Full Data + Metadata Journaling

ext3 and ext4 with the data=journal mount option.

3. Ordered Journaling

In ordered journaling, only the metadata is recorded in the journal, but the metadata is committed only after the file data has been written to its final location on the disk.

| Pros | Cons |
|---|---|
| It balances speed and safety. | Recent changes can still be lost, although the risk of corruption is lower. |
| It reduces the chance of old or garbage data appearing in a file after a crash. | |

Example of Ordered Journaling

The default mode of ext4 on Linux (data=ordered).

4. Writeback Journaling

It records metadata in the journal, but the file data can be written to the disk before or after the metadata is committed, with no ordering guarantee.

| Pros | Cons |
|---|---|
| Performance is the highest of all journaling modes. | The risk of old or mixed data in files after a crash is high, although the file system structure remains intact. |

Example of Writeback Journaling

The optional data=writeback mode provided by ext3 and ext4.
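To compare the modes side by side, here is a rough sketch of the order in which a single file write reaches the journal and the disk. It uses the ext3/ext4 mode names (`journal`, `ordered`, `writeback`); ordered and writeback are both forms of metadata-only journaling. The `write_sequence` function and the step labels are hypothetical and greatly simplified.

```python
def write_sequence(mode):
    """Return (ordered_steps, unordered_steps) for one file write."""
    if mode == "journal":
        # Full data + metadata journaling: everything is logged first.
        ordered = ["data->journal", "metadata->journal", "commit",
                   "data->disk", "metadata->disk"]
        return ordered, []
    if mode == "ordered":
        # Data reaches the disk before the metadata is committed.
        ordered = ["data->disk", "metadata->journal", "commit",
                   "metadata->disk"]
        return ordered, []
    if mode == "writeback":
        # Data may reach the disk at any point relative to the journal.
        ordered = ["metadata->journal", "commit", "metadata->disk"]
        return ordered, ["data->disk"]
    raise ValueError(f"unknown mode: {mode}")


for mode in ("journal", "ordered", "writeback"):
    ordered, unordered = write_sequence(mode)
    print(f"{mode:9} ordered={ordered} unordered={unordered}")
```

The only difference between ordered and writeback in this model is whether `data->disk` is pinned before `commit`, which is what decides whether a file can contain stale data after a crash.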

How Does Journaling Affect Storage?

Journaling affects storage in several ways.

The journal itself takes up space on the disk. For example, ext4 typically reserves around 128 MB for the journal by default, and more on large partitions. The journal's size is fixed once it has been created, but it is still a storage overhead.

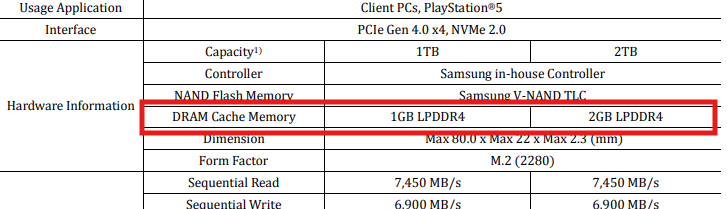

Whatever is journaled is written twice: once to the journal and once to the file system area. That is always the metadata and, with full data journaling, the file data as well. This causes write amplification.
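A quick back-of-the-envelope calculation shows how large that amplification is. The sketch below assumes a 10 MB file and a hypothetical 4 KB of metadata, and it ignores journal headers and commit records; `bytes_written` and `amplification` are illustrative helpers only.

```python
def bytes_written(data_bytes, metadata_bytes, journals_data):
    """Total bytes hitting the disk for one write in a toy model."""
    journal = metadata_bytes + (data_bytes if journals_data else 0)
    main_area = data_bytes + metadata_bytes
    return journal + main_area


def amplification(data_bytes, metadata_bytes, journals_data):
    """Bytes actually written divided by bytes the caller asked to store."""
    logical = data_bytes + metadata_bytes
    return bytes_written(data_bytes, metadata_bytes, journals_data) / logical


DATA = 10 * 1024 * 1024   # the 10 MB video file
META = 4 * 1024           # assumed metadata size, for illustration only

print(amplification(DATA, META, journals_data=True))   # 2.0
print(amplification(DATA, META, journals_data=False))  # just above 1.0
```

With full data journaling the factor is 2, while metadata-only journaling adds well under one percent in this example.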

Note: Journaling may slow down writes because of the extra I/O, but it is usually worth it because it reduces the risk of corruption.

Real World Impact:

- On a 1 TB SSD, the journal overhead is small: no more than a few hundred MB.

- On small devices such as a microSD card, the journaling overhead might be noticeable.

- On SSD/NVMe drives, journaling remains a sound choice.
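To put rough numbers on the space overhead, the snippet below computes the journal's share of a volume. It assumes the 128 MB journal mentioned earlier for both volumes; the 2 GB card is a hypothetical example, and real formatting tools may pick a smaller journal on small volumes.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4


def journal_overhead_percent(journal_bytes, volume_bytes):
    """Share of the volume taken by the journal, in percent."""
    return 100 * journal_bytes / volume_bytes


print(f"{journal_overhead_percent(128 * MIB, 1 * TIB):.4f}%")  # 0.0122%
print(f"{journal_overhead_percent(128 * MIB, 2 * GIB):.2f}%")  # 6.25%
```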

Conclusion

Journaling is a vital file system mechanism for keeping data consistent after crashes, unexpected hardware failures, or sudden shutdowns. By recording changes before committing them to the main file system, journaling ensures that incomplete operations can be identified and then safely rolled back or replayed.

This reliability, however, comes with a tradeoff: the journal takes additional disk space, which matters most on embedded systems and small storage devices, and the extra operations involved in logging may reduce write performance.