DRAM SSDs are known for their high performance and reliability. On the other hand, DRAM-less SSDs are a budget-friendly option for users who want to prioritize cost over peak performance. These drives are generally good, albeit with slightly lower speeds, in exchange for savings. Low-end systems can work well even with DRAM-less SSDs. The right choice also depends on what you plan to use the drive for.

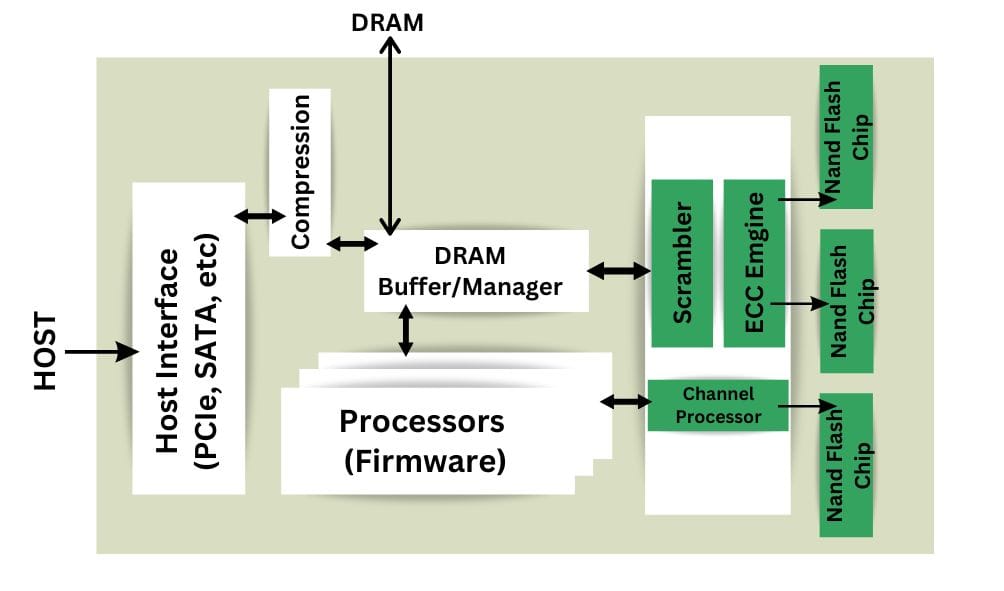

DRAM SSDs will have their own dedicated DRAM chip integrated directly into the SSD itself. It will serve many purposes, including read/write caching, buffering, FTL management, garbage collection, etc. However, in the DRAM-less SSDs, you won’t see any DRAM chip on the drive.

DRAM-less SSDs, following the release of NVMe 1.2, can use part of the system’s RAM in place of on-board DRAM through a feature called HMB (Host Memory Buffer). The SSD accesses this memory through DMA, or Direct Memory Access. The size of HMB is tiny, typically ranging from 20 to 64 MB. It doesn’t completely replace the DRAM but acts as a small cache for a part of the mapping table.

DRAM SSDs have undeniable benefits, while DRAM-less SSDs can also get most of the work done without many issues. Unless you are a performance seeker or have heavy workloads on your drive, the DRAM-less SSD can work quite well. However, DRAM SSDs offer several advantages, which we will discuss later in the article.

Why is DRAM used in SSDs? Its benefits

DRAM is a type of volatile or temporary memory that is primarily used in conjunction with CPUs. Compared to NAND Flash chips (used in SSD storage), DRAM is significantly faster. DRAM is based on capacitor storage, whereas NAND Flash memory is based on transistor storage. If your SSD features a DDR5 DRAM chip, it can achieve a data transfer speed of around 25,000 MB/s. This speed is utilized on SSDs to handle many operations.

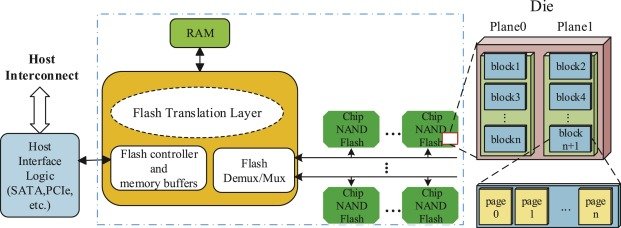

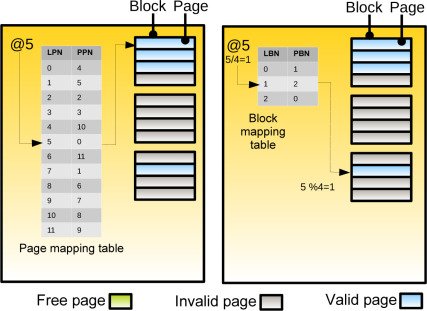

1. Flash Translation Layer (LBA to PBA)

The first application of DRAM is to store the data mapping table. This table is part of the Flash Translation Layer (FTL). Its purpose is to translate logical block addresses (LBAs) into physical locations (PBAs) on the NAND flash. To understand it better, the operating system views storage as a linear array of blocks, similar to numbered pages in a book. But NAND flash doesn’t store data in a straight line. It is organized into blocks, and each block contains many pages. Data is written one page at a time but erased only in whole blocks. Also, you can’t overwrite data directly; the block must be erased first. So, to let the operating system use the NAND flash the way it expects, the SSD has to keep track of where every piece of data lives, and this is where the FTL plays its role.

To locate the data and keep track of everything, the controller needs to know its location, which is stored in this table. It also keeps track of the available free blocks on the flash memory chip. Quick access to this table and its fast operation can streamline the process, helping to increase read and write speeds.

With the help of DRAM, the latency of data access is reduced because the controller doesn’t have to wait long to find the data location.
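To make the LBA-to-PBA idea concrete, here is a minimal sketch of a mapping table with out-of-place writes. It is a toy model, not how any particular controller works: the class name `TinyFTL`, the page counts, and the "take the next free page" policy are all assumptions made for illustration.

```python
class TinyFTL:
    """Toy flash translation layer: maps logical pages to physical pages."""

    def __init__(self, num_physical_pages):
        self.mapping = {}                                   # LBA -> PBA
        self.free_pages = list(range(num_physical_pages))   # unwritten pages
        self.invalid_pages = set()                          # stale pages awaiting erase

    def write(self, lba):
        """Write out-of-place: take a fresh page and mark the old copy stale."""
        if not self.free_pages:
            raise RuntimeError("no free pages; garbage collection needed")
        new_pba = self.free_pages.pop(0)
        old_pba = self.mapping.get(lba)
        if old_pba is not None:
            # NAND cannot be overwritten in place, so the old page is only
            # flagged as invalid until its whole block is erased later.
            self.invalid_pages.add(old_pba)
        self.mapping[lba] = new_pba
        return new_pba

    def read(self, lba):
        """Translate an LBA to its current physical page (None if never written)."""
        return self.mapping.get(lba)


ftl = TinyFTL(num_physical_pages=8)
ftl.write(lba=5)   # first write of LBA 5 lands on physical page 0
ftl.write(lba=5)   # the "overwrite" lands on page 1; page 0 becomes stale
print(ftl.read(5), ftl.invalid_pages)
```

Every read and write goes through `mapping`, which is why keeping that table in fast memory matters so much.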

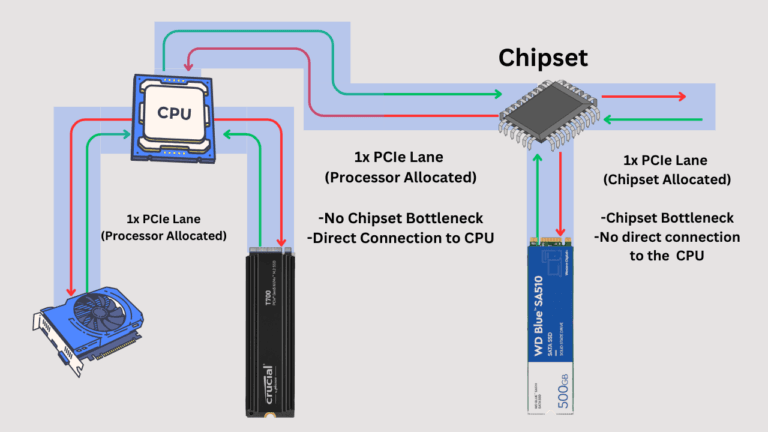

If the SSD uses HMB (Host Memory Buffer), mapping lookups take longer than with on-board DRAM because they must travel over the PCIe/NVMe interface. However, this is still a much better option than keeping the FTL on raw NAND flash, which was the only choice for DRAM-less drives before the release of NVMe 1.2. Most SSDs from that period had DRAM; now that HMB reduces manufacturing costs, companies are releasing DRAM-less drives at a rapid pace. And honestly, HMB is a reasonable substitute and an innovative technique for reducing SSD costs while offering acceptable performance.

2. Read Caching and Buffering

As a read cache, DRAM chips are used to store frequently used data, also known as hot data. This increases the system’s performance because the read latency is reduced. In caching, copies of this hot data are stored to minimize the access time. If you access the same file or app repeatedly, its data may remain in the DRAM to reduce the time it takes to access it from the NAND flash. This is helpful for app loading, accessing OS boot files, and other frequently used documents.
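The hot-data idea can be sketched with a small least-recently-used (LRU) cache. The article does not say which eviction policy real controllers use, so LRU is an assumption here, as are the `ReadCache` name and the `fake_nand` stand-in for a slow NAND read.

```python
from collections import OrderedDict


class ReadCache:
    """Toy LRU read cache sitting in front of a slower backing store."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # LBA -> data, oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, lba, read_from_nand):
        if lba in self.entries:
            self.entries.move_to_end(lba)      # mark as most recently used
            self.hits += 1
            return self.entries[lba]
        self.misses += 1
        data = read_from_nand(lba)             # slow path
        self.entries[lba] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used
        return data


def fake_nand(lba):
    """Hypothetical stand-in for a NAND page read."""
    return f"data-{lba}"


cache = ReadCache(capacity=2)
for lba in [1, 2, 1, 3, 1, 2]:
    cache.read(lba, fake_nand)
print(cache.hits, cache.misses)
```

LBA 1 is requested repeatedly, so it stays cached, while the rarely used entries get evicted.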

DRAM has an access time of 10 to 100 nanoseconds. NAND flash has a read latency of 50 to 100 µs. So, depending on which ends of those ranges you compare, DRAM is roughly 500 to 10,000 times faster than NAND. Any data stored in it is therefore available to the controller almost immediately. This is especially helpful in random read operations.
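How large the gap is depends on which ends of the two ranges are compared. A quick calculation using only the latency figures quoted above (the variable names are just for illustration):

```python
# Latency ranges quoted in the text, converted to nanoseconds.
dram_ns = (10, 100)            # DRAM access time: 10 to 100 ns
nand_ns = (50_000, 100_000)    # NAND latency: 50 to 100 microseconds

# Smallest gap: fastest NAND versus slowest DRAM.
min_speedup = nand_ns[0] / dram_ns[1]
# Largest gap: slowest NAND versus fastest DRAM.
max_speedup = nand_ns[1] / dram_ns[0]

print(f"DRAM is between {min_speedup:.0f}x and {max_speedup:.0f}x faster")
```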

DRAM is generally not used as the main write cache. For this, most SSDs rely on an SLC cache. It absorbs heavy incoming streams of data quickly and then moves them to the regular NAND flash when the drive is relatively idle.

3. Help in running algorithms

DRAM helps in garbage collection by keeping track of which data needs to be erased or moved quickly. When combined with the TRIM command, the DRAM can make this process even smoother. It also helps in running wear-leveling algorithms by increasing the speed of identifying blocks that are not used frequently.

Additionally, the DRAM stores metadata, including error correction codes and bad block information, which aids in over-provisioning and the TRIM operation.
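As a rough illustration of the bookkeeping involved, the sketch below picks a garbage-collection victim and a block for new writes from per-block counters. Real firmware is far more involved; the greedy "fewest valid pages" rule, the `Block` fields, and the 64 pages per block are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass

PAGES_PER_BLOCK = 64   # assumed block geometry


@dataclass
class Block:
    block_id: int
    valid_pages: int    # pages that still hold live data
    erase_count: int    # how many times this block has been erased


def pick_gc_victim(blocks):
    """Greedy GC: the block with the fewest valid pages is cheapest to clean."""
    return min(blocks, key=lambda b: b.valid_pages)


def pick_block_for_new_writes(free_blocks):
    """Wear leveling: prefer the free block that has been erased the least."""
    return min(free_blocks, key=lambda b: b.erase_count)


used = [Block(0, 60, 12), Block(1, 5, 40), Block(2, 33, 7)]
free = [Block(3, 0, 55), Block(4, 0, 9)]

victim = pick_gc_victim(used)
target = pick_block_for_new_writes(free)
print(f"clean block {victim.block_id}: copy {victim.valid_pages} pages, "
      f"reclaim {PAGES_PER_BLOCK - victim.valid_pages}")
print(f"send new writes to block {target.block_id}")
```

Both decisions are just lookups over counters, which is the kind of metadata that is quick to scan when it sits in DRAM.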

An SSD Without the DRAM

An SSD without its own DRAM will utilize either part of the NAND flash memory or the system’s memory. If it uses NAND flash for caching, that portion operates in SLC mode (SLC stores only one bit per cell). A DRAM-less SSD that supports NVMe 1.2 or later can use HMB (Host Memory Buffer), provided the drive and the operating system both support it.

An important point to note is that the SLC-Cache or Pseudo-SLC Cache is used solely for caching, not for storing the FTL. At the same time, the system RAM in HMB mode can be utilized for all the functions that a real SSD DRAM can perform. In the absence of DRAM and HMB, the pseudo-SLC may be used for caching, while the FTL is stored on ordinary NAND flash, which will be slower than both DRAM and HMB.

All these features, such as write caching, algorithms, read caching, and table mapping, will still run, regardless of whether an SSD has DRAM or not. It is just that the speed and efficiency of these operations will be reduced.

On a DRAM SSD, by contrast, the DRAM is installed directly on the drive, so access latency is lower. The connection is direct, and the controller firmware is written to use that DRAM efficiently.

Drawbacks of a DRAM-Less SSD

Because communication must go through the PCIe bus (HMB is an NVMe feature and is not available on SATA drives), HMB has higher latency than on-board DRAM and reduces performance. Although DMA is used to avoid involving the CPU, latency still rises noticeably. A small part of the system’s RAM is also taken up, which matters most on low-end computers. In heavy-load scenarios, the interface can become congested with traffic from other components, resulting in degraded SSD performance. If the system has slow RAM, the SSD’s performance suffers with it.

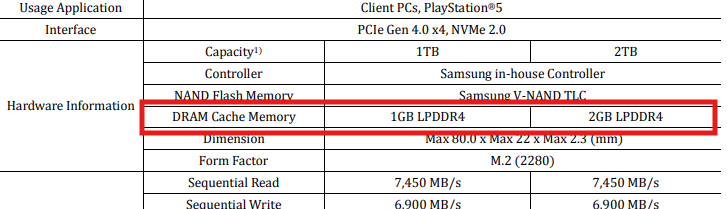

The size of HMB is limited, typically ranging from 20 to 64 MB of host RAM. By comparison, DRAM SSDs often have 1 GB of DRAM per 1 TB of storage. HMB will only work if the operating system supports it. It is supported by Windows 10 and later versions, as well as Linux versions 4.14 and later. macOS doesn’t natively support HMB.

This study found that DRAM-less SSDs without HMB can have up to 60–70% lower random read performance than DRAM-based SSDs. However, the random read performance improves by 30–50% when we use HMB, becoming nearly equal to sequential read speeds in the compared DRAM SSDs.

In heavy workloads, DRAM SSDs had up to twice the bandwidth of DRAM-less SSDs in both random and sequential reads. With HMB enabled, random read performance improved by 40–60%, but write performance changed only slightly.

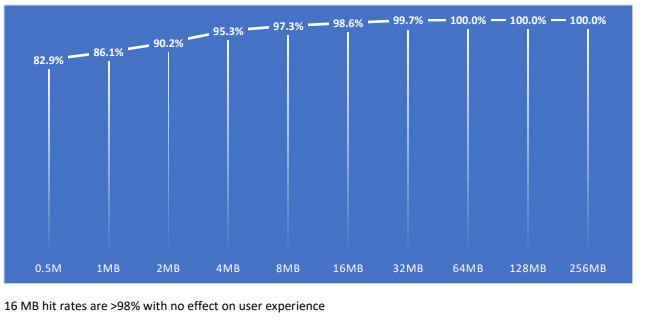

A 512 GB SSD needs ~512 MB for full FTL cache. In this study, it was found that the HMB space ranged from 64 MB to 480 MB. The effective caching threshold is observed around 4 KB per mapping unit.
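The 512 MB figure follows from simple arithmetic if each 4 KB mapping unit needs one table entry. The 4-byte entry size below is an assumption (a common choice, but not stated in the article), and the function name is made up for this sketch.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def ftl_table_bytes(capacity_bytes, mapping_unit=4096, entry_bytes=4):
    """Size of a flat mapping table with one entry per mapping unit."""
    return capacity_bytes // mapping_unit * entry_bytes


full_table = ftl_table_bytes(512 * GIB)
print(f"full table for 512 GB: {full_table // MIB} MB")

# Share of that table that fits in a 64 MB host memory buffer.
hmb_share = (64 * MIB) / full_table
print(f"a 64 MB HMB holds {hmb_share:.1%} of the table")
```

Under these assumptions the ratio works out to about 1 MB of table per 1 GB of storage, which lines up with the "1 GB of DRAM per 1 TB" rule of thumb mentioned earlier.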

Benefits of DRAM-Less SSDs

1. Lower Price

DRAM chips are expensive, so a drive without one costs less to make. DRAM-less SSDs are generally cheaper than DRAM SSDs with otherwise identical specifications. Therefore, if SSD price is a significant factor, DRAM-less SSDs can be a perfect option.

2. Lower Power Consumption

DRAM chips require additional power to function, primarily because they are based on capacitors that must be refreshed constantly. Removing the DRAM therefore reduces the drive’s power consumption. Although the difference is not large, it can still be beneficial, particularly in laptops and mobile devices.

3. Sufficient performance on low-end systems

No matter how much you compromise by going for a DRAM-less NVMe SSD, its performance is still generally better than that of SATA SSDs. That is sufficient for most average systems. For moderate workloads and less demanding applications, these drives will deliver good performance.

However, if your work involves heavy read/write operations, you should opt for a drive with DRAM. For tasks such as video editing, AI, 3D modeling, and mining, you should select a DRAM SSD.

4. Less Heat Generation

DRAM is one of the major contributors to heat in SSDs after the controller and NAND flash chips. Although that heat is a fair price for the performance DRAM brings, a DRAM-less SSD has the advantage of running comparatively cooler. Without DRAM, the controller design is also simpler.

DRAM vs DRAM-Less SSD: Which one to choose?

If you care about the longevity, performance, and reliability of your drive, you should go for the DRAM SSD. It will provide you with numerous advantages in both the long and short term. Your write performance will improve, and your day-to-day tasks will benefit as a result. A DRAM-less SSD may also wear out sooner than a DRAM SSD because of its higher write amplification.

However, if your system has a light workload and you mostly do read operations, a DRAM-less SSD can be a suitable option for you. The price will be lower, so you have another advantage. DRAM-Less SSDs also consume less power and run cooler.

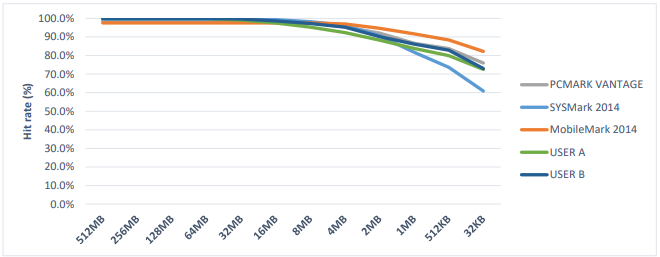

HMB is becoming increasingly advanced, and hit rates of up to 98% have been achieved with as little as 16 MB of HMB.
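To see why the hit rate matters so much, here is a back-of-the-envelope estimate of the average mapping-lookup time. The 1 µs cost of an HMB hit is purely an assumed placeholder; the 50 µs miss cost is the lower end of the NAND latency quoted earlier in this article.

```python
def average_lookup_us(hit_rate, hit_us, miss_us):
    """Expected lookup time for a cache with the given hit rate."""
    return hit_rate * hit_us + (1.0 - hit_rate) * miss_us


HMB_HIT_US = 1.0      # assumed cost of finding the entry in HMB
NAND_MISS_US = 50.0   # cost of fetching the entry from NAND instead

for rate in (0.50, 0.90, 0.98):
    avg = average_lookup_us(rate, HMB_HIT_US, NAND_MISS_US)
    print(f"hit rate {rate:.0%}: about {avg:.2f} us per lookup")
```

With these placeholder numbers, going from a 50% to a 98% hit rate cuts the average lookup time by more than a factor of ten, which is why a small but well-managed HMB can get close to DRAM for typical workloads.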

| Feature | DRAM SSD | DRAM-less SSD |
|---|---|---|
| Cache Type | Dedicated DRAM cache | Uses Host Memory Buffer (HMB) or SLC cache |
| Performance | Generally better performance | Typically lower performance, especially in random input/output operations |
| Latency | Lower latency thanks to on-board DRAM | Higher latency due to HMB or direct NAND access |
| Write Speed | Faster and more consistent | Slower when the SLC cache is full or no cache is used |
| Read Speed | Faster due to efficient caching | Slower, especially in random reads |
| Endurance | Lower write amplification, which helps the NAND lifespan | Higher write amplification, impacting the NAND lifespan |
| Reliability | More reliable with good error correction and caching | Less reliable in high-load scenarios due to less effective caching |

DRAM vs DRAM-Less SSD for gaming

An SSD with DRAM will benefit a gamer the most. This is because there is generally repetitive data utilized while playing games. The read cache in the DRAM will give a clear advantage over a DRAM-less SSD. DRAM SSDs will be helpful in quick asset loading and game booting.

DRAM-less SSDs, on the other hand, will be suitable for budget gamers. If you’re willing to accept slightly longer boot times and reduced performance, a DRAM-less SSD is an appropriate option.

Don’t compromise on NAND Flash for DRAM.

I would not recommend buying a QLC SSD with DRAM over a TLC or MLC SSD without DRAM. If a drive has TLC or MLC NAND flash but uses HMB instead of DRAM, consider purchasing it over a QLC SSD with DRAM. The Samsung 980 is a good example here: it has no DRAM and uses TLC NAND (which Samsung markets as 3-bit MLC). Even so, it should generally be more reliable than a QLC SSD with DRAM.

I hope this helps!